There’s a long-running debate about PPC vs. SEO, and many business owners ask which one will give them the biggest return on investment. The short answer is that both of these digital marketing strategies are strong on their own, but paired together they make the ultimate recipe for digital marketing success. Below are five benefits of taking a holistic approach that combines PPC and SEO in your digital marketing strategy.

But first… let’s talk about how these two strategies work.

What Is PPC?

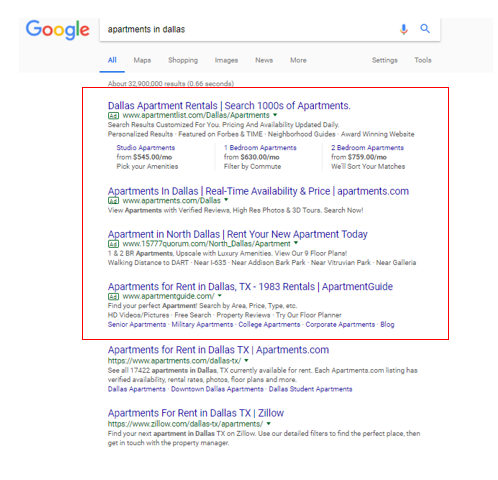

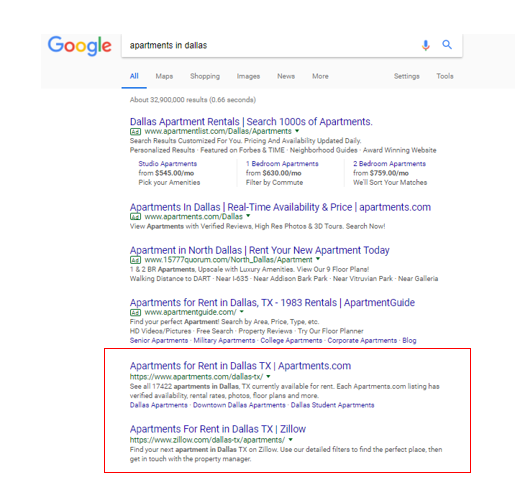

PPC stands for Pay-Per-Click, a digital marketing model in which an advertiser is charged each time someone clicks their ad. PPC ads appear at the top of the search engine results page, also known as the SERP.

What Is SEO?

SEO stands for Search Engine Optimization. With SEO, the goal is to earn traffic to your website through the organic, or “natural,” listings, which appear beneath the PPC ads on the SERP.

Now that you understand how the two strategies work, let’s talk about the benefits of having both in place.

Dominate The SERP & Increase Visibility

Dominating the SERP can greatly improve your overall online marketing performance. Not only will you increase traffic to your website, but you will also leave less room for your competitors. A marketing strategy that includes both PPC and SEO means your target audience can see your brand twice on the same results page: once in the ads and once in the organic listings. That double presence provides the extra credibility users look for when searching online.

It is also important to remember that SEO is a long-term investment: a marathon, not a sprint. It can take three to six months before search engines register the changes and optimizations you have made to your site and your rankings reflect them. PPC, on the other hand, can start driving traffic as soon as your ads go live. Pairing the two strategies ensures that while you work on improving your organic rankings, your brand stays in front of your target audience through paid ads.

Create A Cohesive Keyword Strategy

Your SEO strategy isn’t quite complete without PPC. Because improving your SEO rankings involves a lot of behind-the-scenes work on your site, you want to make sure the keywords you target are worth the time and effort. PPC lets you test keywords, seeing which ones bring in clicks and conversions, before you commit to them in your SEO strategy.

Want to go after local terms without draining your PPC budget? SEO can be a great way to target keywords that would otherwise eat up your ad spend.

Improve Landing Page Optimization

Both SEO and PPC rely heavily on the quality of a website’s landing pages. As you optimize your website to follow SEO best practices, your PPC ads’ Quality Score benefits as well. Quality Score is Google’s rating of the quality and relevance of your keywords, PPC ads, and landing pages. The on-site optimizations from your SEO campaign also give you the chance to work the keywords you bid on in your PPC campaigns into your landing page content.

Re-engage Users Through PPC Remarketing

Running PPC and SEO simultaneously helps you reach your target audience at different stages of the customer journey. For example, if a user finds your business organically the first time but does not convert, you can re-engage them through a PPC remarketing campaign. Remarketing is a great way to bring back that hard-earned traffic and give those visitors a second chance to convert.

Strengthen Your SEO With PPC Ads

Some PPC ads will produce results while others won’t perform as well. This is normal. Once you identify the ads that convert, you can use them to strengthen your SEO strategy. For example, the headline of a high-converting PPC ad can be repurposed as an SEO title tag (the on-page HTML element that defines a page’s title, often shown as the clickable headline in search results). Likewise, the description line of a high-converting PPC ad can become an SEO meta description (the on-page HTML element that summarizes a page and often appears as the snippet beneath the title).

The next time you find yourself wondering whether PPC or SEO is better for your business, consider these five benefits of a comprehensive approach.
